I am currently a Postdoctoral Fellow at MMLab, the Chinese University of Hong Kong, and at CPII, working with Prof. Xiangyu Yue.

My current research interests lie in vision-language learning, multimodal large language models (MLLMs), and embodied agents. (1) During my graduate studies, I focused on building intelligent perception models that understand visual and linguistic information. (2) More recently, I’ve been exploring decision-making systems capable of actively interacting with both humans and dynamic environments. Ultimately, my goal is to develop human-like agents that can perceive real-world environments and make autonomous decisions, moving us closer to achieving artificial general intelligence (AGI). Two articles that have deeply inspired my thinking are *The Bitter Lesson* and *The Second Half*.

I am always open to collaboration and discussions about the latest advancements in the field. Feel free to reach out!

🔥 News

- 2026.02: 🎉 🎉 One paper (DEGround) is accepted by CVPR Findings 2026. It ranks first on the EmbodiedScan Challenge.

- 2026.01: 🎉 🎉 One paper (AutoFly) is accepted by ICLR 2026.

- 2025.09: I joined CUHK MMLab and CPII as a Postdoctoral Fellow.

- 2025.06: One paper (RAGNet) is accepted by ICCV 2025.

- 2025.06: I successfully defended my Ph.D. thesis and was named an Outstanding Graduate of Beijing (北京市优秀毕业生).

- 2025.02: One paper (DrivingSphere) is accepted by CVPR 2025.

- 2024.12: One paper (NuPrompt) is accepted by AAAI 2025.

- 2024.05: I was awarded the Excellent Doctoral Thesis Seedling Fund (优秀博士论文育苗基金).

- 2024.01: One paper (TopoMLP) is accepted by ICLR 2024.

📝 Publications

Conference & Preprint

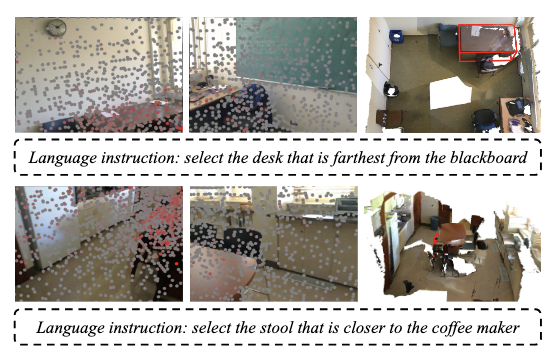

DEGround: An Effective Baseline for Ego-centric 3D Visual Grounding With a Homogeneous Framework

Yani Zhang*, Dongming Wu*, Yingfei Liu, Hao Shi, Tiancai Wang, Xingping Dong (*Equal Contributions)

- DEGround ranks first on the EmbodiedScan Challenge.

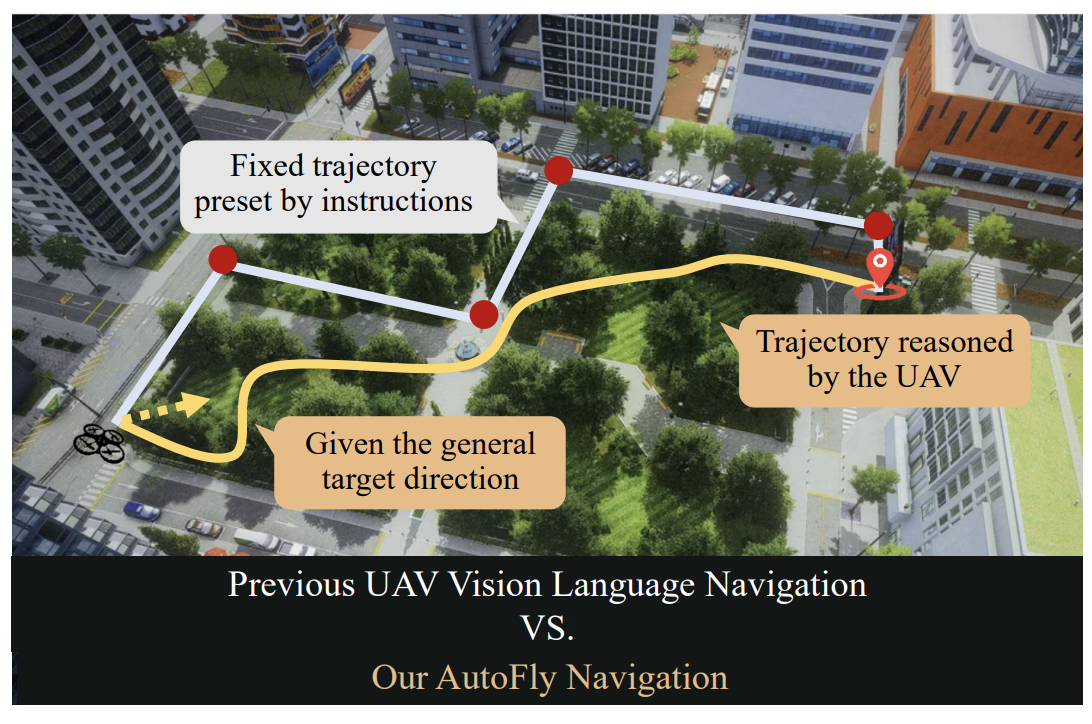

AutoFly: Vision-Language-Action Model for UAV Autonomous Navigation in the Wild

Xiaolou Sun, Wufei Si, Wenhui Ni, Yuntian Li, Dongming Wu, Fei Xie, Runwei Guan, He-Yang Xu, Henghui Ding, Yuan Wu, Yutao Yue, Yongming Huang, Hui Xiong

- AutoFly is a Vision-Language-Action (VLA) model for autonomous UAV navigation in real-world environments, with a pseudo-depth encoder and progressive two-stage training.

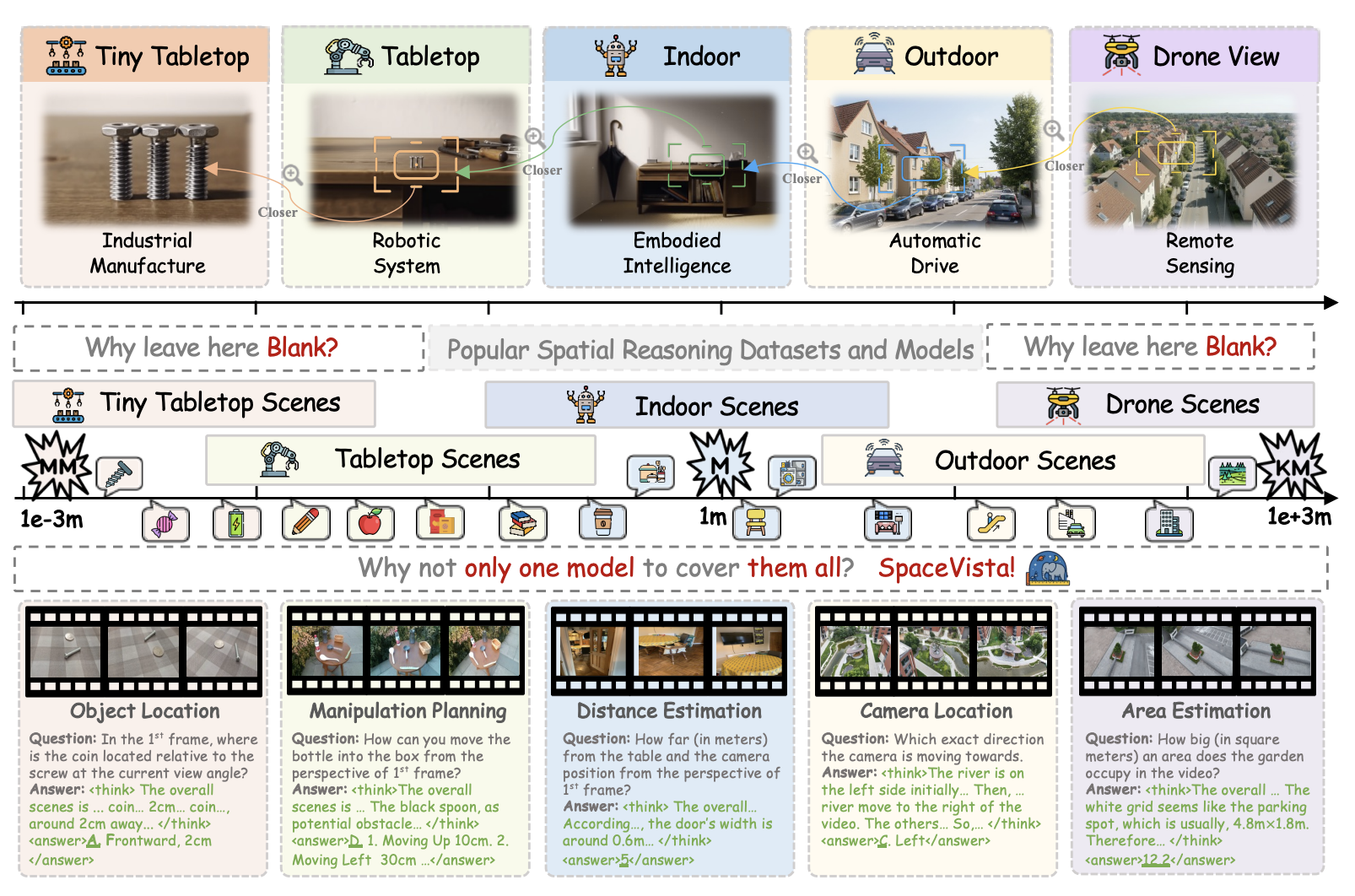

SpaceVista: All-Scale Visual Spatial Reasoning from mm to km

Peiwen Sun, Shiqiang Lang, Dongming Wu, Yi Ding, Kaituo Feng, Huadai Liu, Zhen Ye, Rui Liu, Yun-Hui Liu, Jianan Wang, Xiangyu Yue

|Paper|

- To the best of our knowledge, SpaceVista is the first attempt to extend the spatial intelligence of MLLMs to all scales, from millimeters to kilometers.

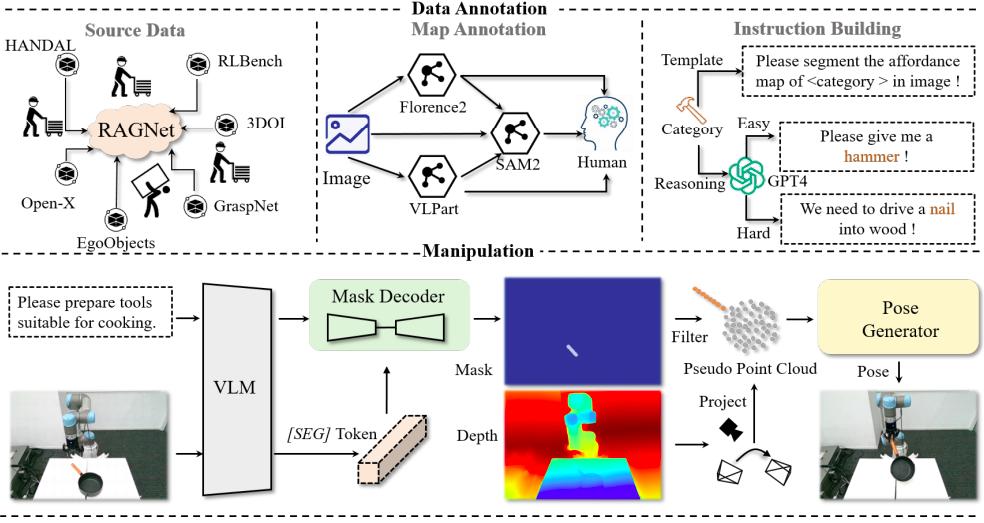

RAGNet: Large-scale Reasoning-based Affordance Segmentation Benchmark towards General Grasping

Dongming Wu, Yanping Fu, Saike Huang, Yingfei Liu, Fan Jia, Nian Liu, Feng Dai, Tiancai Wang, Rao Muhammad Anwer, Fahad Shahbaz Khan, Jianbing Shen

- We present RAGNet, a large-scale reasoning-based affordance segmentation benchmark, and introduce AffordanceNet, a comprehensive affordance-based grasping framework.

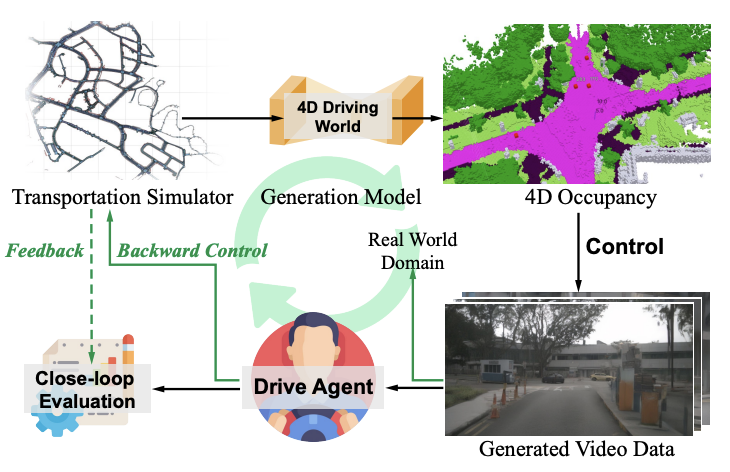

DrivingSphere: Building a High-fidelity 4D World for Closed-loop Simulation

Tianyi Yan, Dongming Wu, Wencheng Han, Junpeng Jiang, Xia Zhou, Kun Zhan, Cheng-zhong Xu, Jianbing Shen

- DrivingSphere is a novel geometry-aware closed-loop simulation framework that captures 2D visual and 3D geometric properties while seamlessly integrating with vision-based end-to-end driving agents.

Is a 3D-Tokenized LLM the Key to Reliable Autonomous Driving?

Yifan Bai*, Dongming Wu*, Yingfei Liu, Fan Jia, Weixin Mao, Ziheng Zhang, Yucheng Zhao, Jianbing Shen, Xing Wei, Tiancai Wang, Xiangyu Zhang (*Equal Contributions)

|Paper|

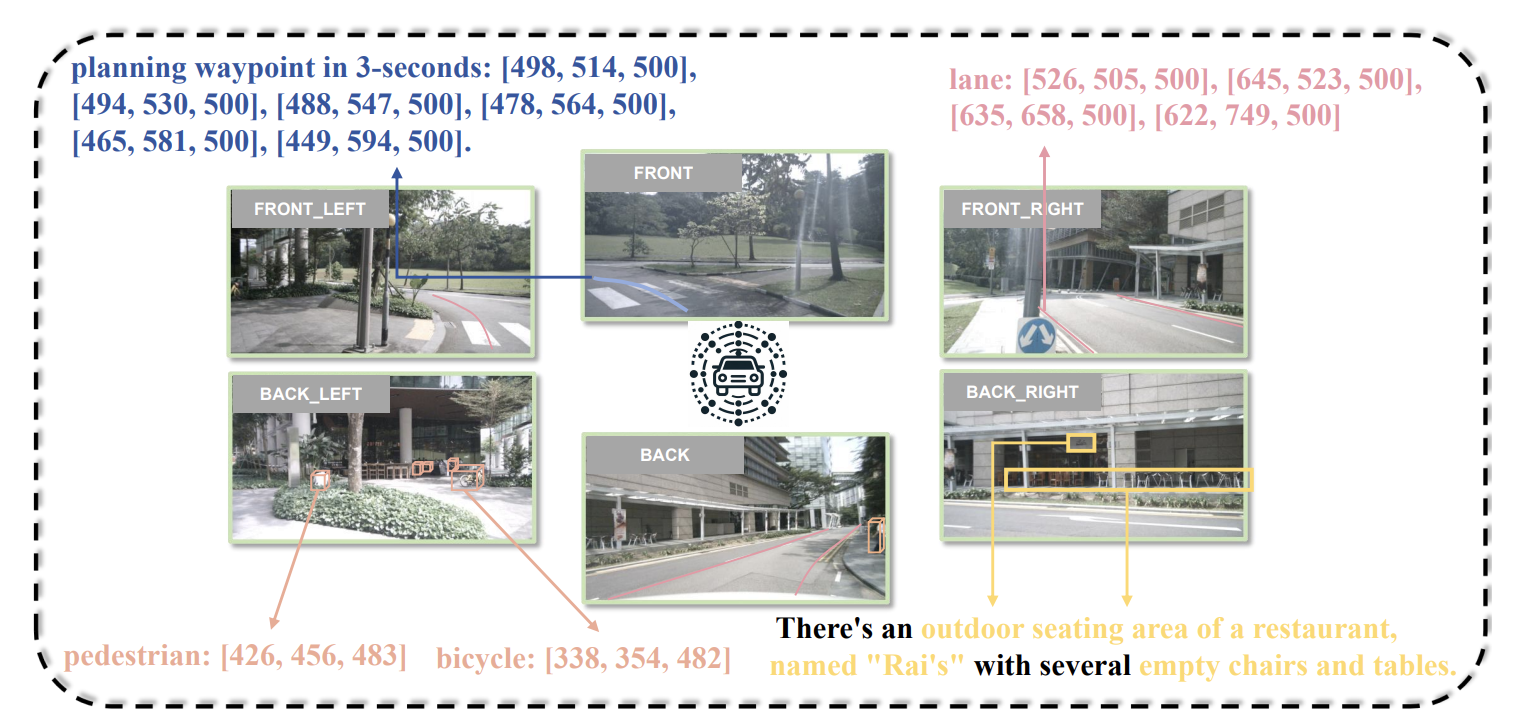

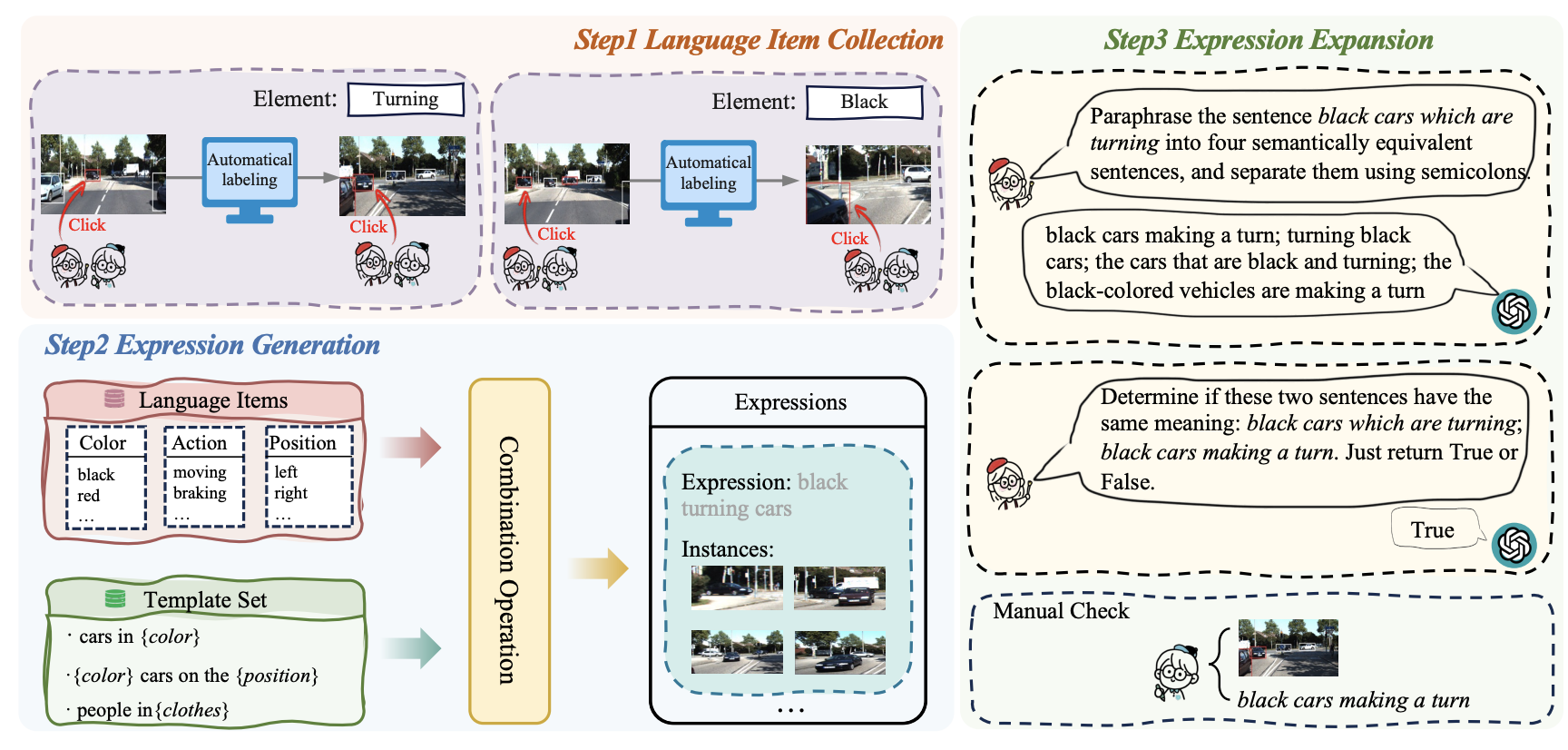

Language Prompt for Autonomous Driving

Dongming Wu, Wencheng Han, Tiancai Wang, Yingfei Liu, Xiangyu Zhang, Jianbing Shen

- We propose NuPrompt, a new large-scale language prompt set for driving scenes. To the best of our knowledge, it is the first dataset focused on multiple 3D objects of interest in the video domain.

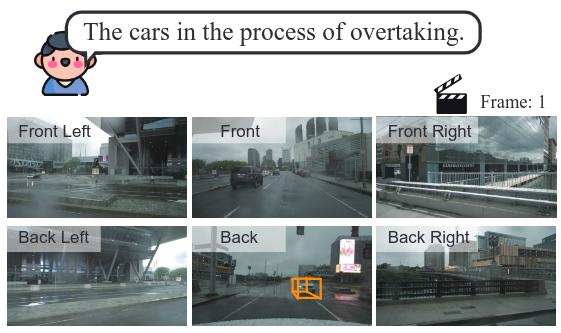

Bootstrapping Referring Multi-Object Tracking

Yani Zhang*, Dongming Wu*, Wencheng Han, Xingping Dong (*Equal Contributions)

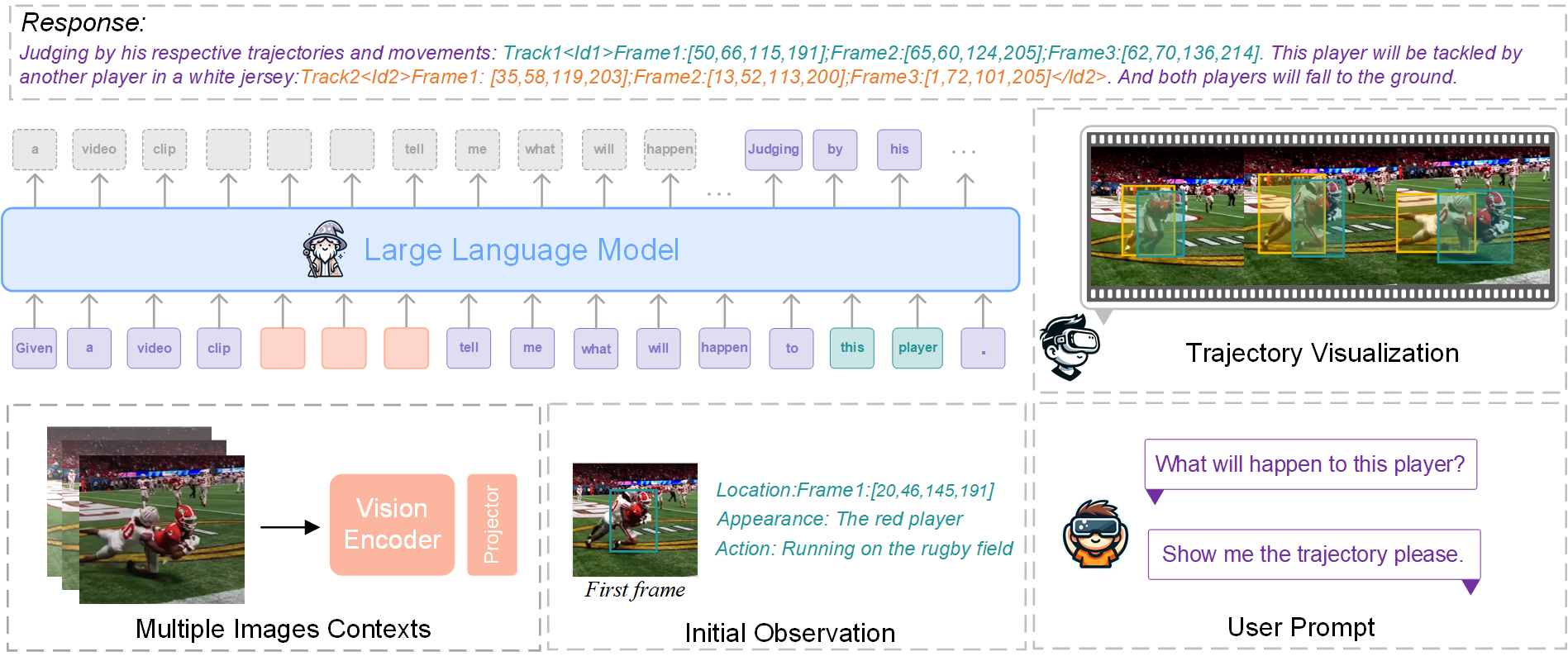

Merlin: Empowering Multimodal LLMs with Foresight Minds

En Yu, Liang Zhao, Yana Wei, Jinrong Yang, Dongming Wu, Lingyu Kong, Haoran Wei, Tiancai Wang, Zheng Ge, Xiangyu Zhang, Wenbing Tao

- Merlin is a multimodal LLM that generates natural language responses closely linked to object trajectories across multiple images.

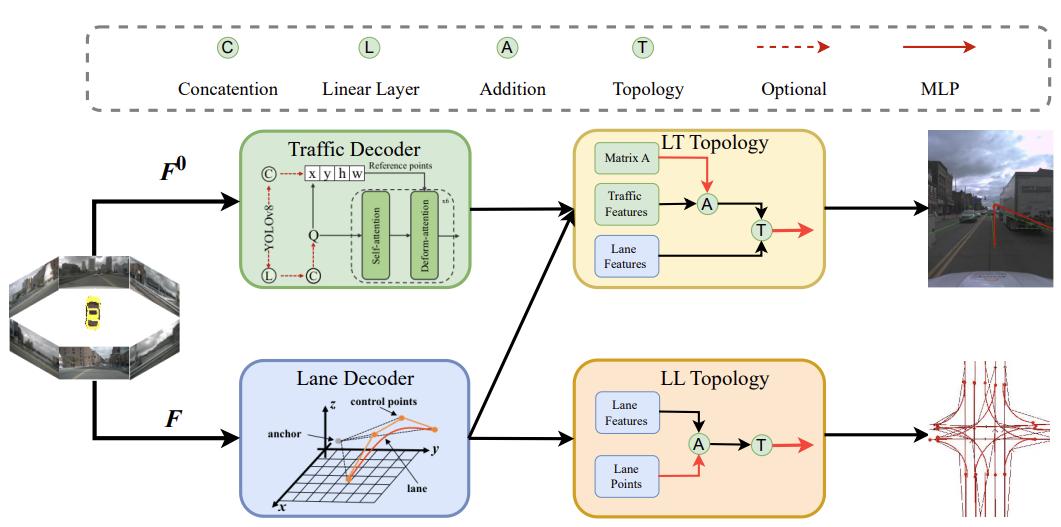

TopoMLP: A Simple yet Strong Pipeline for Driving Topology Reasoning

Dongming Wu, Jiahao Chang, Fan Jia, Yingfei Liu, Tiancai Wang, Jianbing Shen

- TopoMLP is the 1st-place solution in the OpenLane Topology in Autonomous Driving Challenge. It adopts a first-detect-then-reason philosophy for better topology prediction.

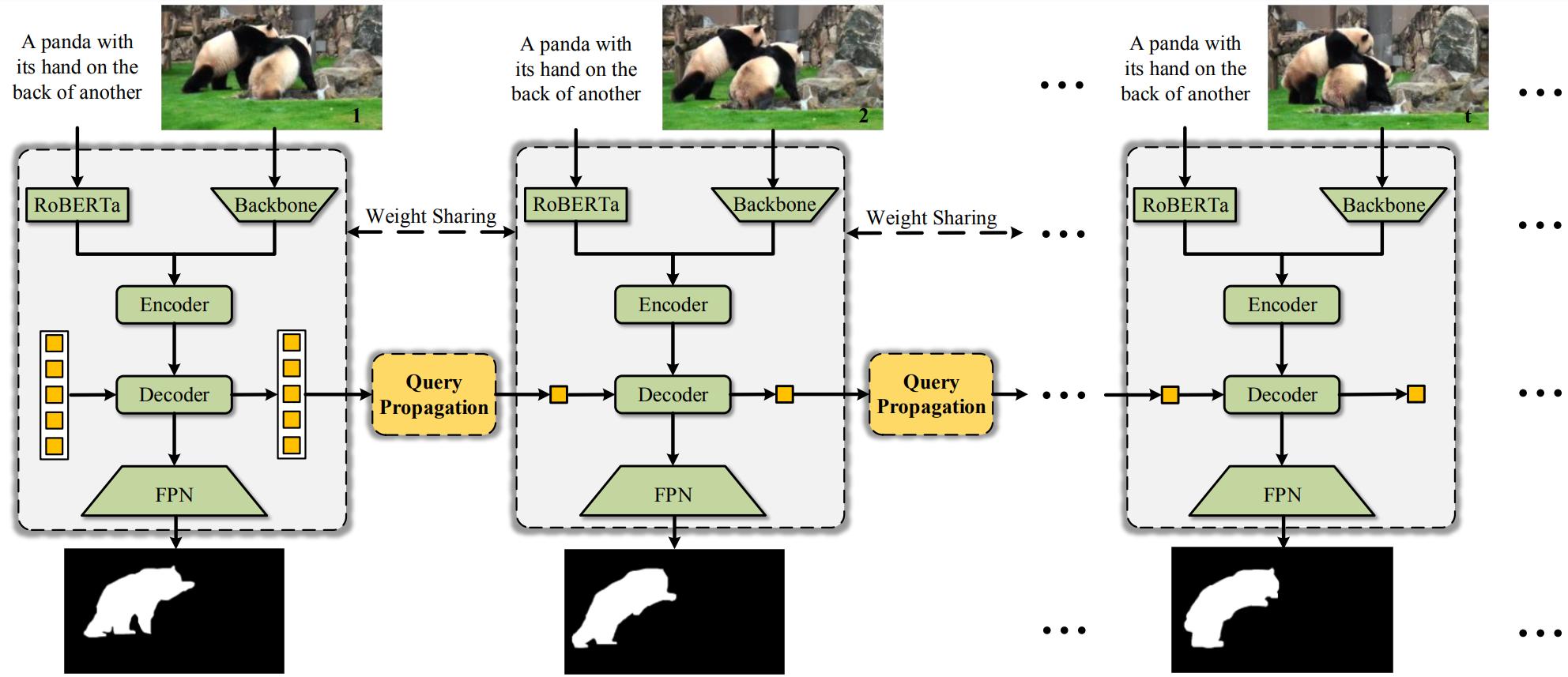

OnlineRefer: A Simple Online Baseline for Referring Video Object Segmentation

Dongming Wu, Tiancai Wang, Yuang Zhang, Xiangyu Zhang, Jianbing Shen

- OnlineRefer is the first work to challenge the widespread belief that only offline models can handle RVOS well, making online RVOS great again.

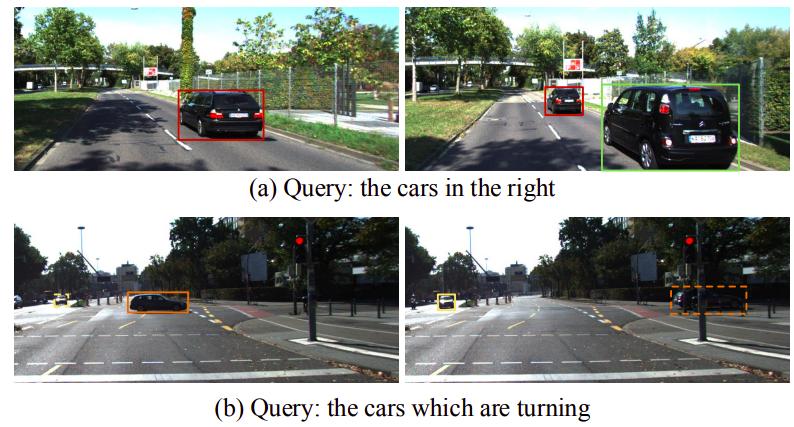

Referring Multi-Object Tracking

Dongming Wu, Wencheng Han, Tiancai Wang, Xingping Dong, Xiangyu Zhang, Jianbing Shen

- RMOT is a new referring understanding task that requires detecting and tracking an arbitrary number of objects following a human instruction. We propose Refer-KITTI, the first RMOT benchmark, and TransRMOT, a baseline model.

Multi-Level Representation Learning with Semantic Alignment for Referring Video Object Segmentation

Dongming Wu, Xingping Dong, Ling Shao, Jianbing Shen

|Paper|

Journal

- Person re-identification by context-aware part attention and multi-head collaborative learning (TIFS). Dongming Wu, Mang Ye, Gaojie Lin, Xin Gao, Jianbing Shen. 2021. Paper

- Reducing estimation bias via triplet-average deep deterministic policy gradient (TNNLS). Dongming Wu, Xingping Dong, Jianbing Shen, Steven CH Hoi. 2020. Paper

Technical Report

- The 1st-place Solution for CVPR 2023 OpenLane Topology in Autonomous Driving Challenge. Dongming Wu, Fan Jia, Jiahao Chang, Zhuoling Li, Jianjian Sun, Chunrui Han, Shuailin Li, Yingfei Liu, Zheng Ge, Tiancai Wang. 2023. Paper | Code

🎖 Honors

- Excellent Doctoral Dissertation of Beijing Institute of Technology (北京理工大学优秀博士论文)

- Outstanding Graduates of Beijing (北京市优秀毕业生)

- Outstanding Graduates of Beijing Institute of Technology (北京理工大学优秀毕业生)

- Excellent Doctoral Dissertation Seedling Fund of Beijing Institute of Technology (北京理工大学优秀博士论文育苗基金)

- National Scholarship (国家奖学金), Ministry of Education of China

- ChinaCentury Scholarship (华瑞世纪奖学金), Beijing Institute of Technology

🏆 Challenge Awards

- 1st place in the EmbodiedScan Challenge, Shanghai AI Lab

- 1st place in the OpenLane Topology track of the CVPR 2023 Autonomous Driving Challenge ($15,000), Shanghai AI Lab and Huawei

📖 Education

- 2025.06, PhD, Department of Computer Science, Beijing Institute of Technology, advised by Prof. Jianbing Shen.

- 2019.06, Bachelor, Class of Xu, Beijing Institute of Technology.

💻 Internships

Dexmal, Research Intern. Mentor: Yingfei Liu, Tiancai Wang.

MBZUAI, Visiting Student. Mentor: Prof. Rao Muhammad Anwer, Prof. Fahad Shahbaz Khan.

MEGVII, Research Intern. Mentor: Tiancai Wang, Xiangyu Zhang.

IIAI, Research Intern. Mentor: Xingping Dong, Prof. Ling Shao.

🔧 Service

Conferences:

- IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

- IEEE International Conference on Computer Vision (ICCV)

- European Conference on Computer Vision (ECCV)

- Conference on Neural Information Processing Systems (NeurIPS)

- International Conference on Learning Representations (ICLR)

- AAAI Conference on Artificial Intelligence (AAAI)

Journals:

- International Journal of Computer Vision (IJCV)

- IEEE Transactions on Image Processing (TIP)

- IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

- IEEE Transactions on Multimedia (TMM)

- IEEE Transactions on Circuits and Systems for Video Technology (TCSVT)

- IEEE Transactions on Intelligent Vehicles (TIV)

- Pattern Recognition (PR)

- Neurocomputing